Ever since the Play framework came onto the scene, I’ve been sold on the idea of containerless deployment. The old model of a bunch of apps deployed in a single container sharing resources and Java EE components just never really materialized. Thanks to the thriving Grails plugin community, we have the Standalone App Runner plugin that makes containerless deployment dead simple for Grails apps. But what would you be giving up in terms of performance if you move away from a commercial enterprise-y app server like WebLogic in favor of embedded Tomcat or Jetty? As it turns out, absolutely nothing.

The Test

I ran a simple benchmark to compare the performance of the same Grails app deployed on WebLogic, embedded Tomcat, and embedded Jetty (the latter two via the Standalone App Runner plugin, version 1.1.1). The app is a simple stateless REST service that returns a JSON payload representing an order from the default Grails embedded H2 in-memory database. Each test consisted of a batch of 20K requests, and each batch was run at 10, 20, 40, and 80 threads in JMeter with no wait time between requests. Each test set was run 10 times, and the results were averaged.
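
For anyone who wants to picture the load pattern without opening JMeter, here is a rough sketch of the same idea in plain Java: a fixed pool of threads pulls from a shared budget of requests with no pause in between, and the batch reports mean response time, throughput, and error count. This is not the JMeter test plan I used; the class name `LoadSketch`, the `runBatch` helper, and the stubbed request are all made up for illustration. In a real run the `Callable` would issue the GET against the service.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical helper, not part of JMeter or Grails.
class LoadSketch {

    /** Summary of one batch of requests. */
    static final class Result {
        final int requests;
        final int errors;
        final double meanResponseMillis;
        final double requestsPerSecond;

        Result(int requests, int errors, double meanResponseMillis, double requestsPerSecond) {
            this.requests = requests;
            this.errors = errors;
            this.meanResponseMillis = meanResponseMillis;
            this.requestsPerSecond = requestsPerSecond;
        }
    }

    /**
     * Runs totalRequests calls spread over a fixed number of threads,
     * with no wait time between calls. The request returns true on success.
     */
    static Result runBatch(int threads, int totalRequests, Callable<Boolean> request) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        AtomicInteger remaining = new AtomicInteger(totalRequests);
        AtomicInteger errors = new AtomicInteger();
        AtomicLong totalNanos = new AtomicLong();

        long start = System.nanoTime();
        for (int i = 0; i < threads; i++) {
            pool.execute(() -> {
                // Each worker keeps claiming requests until the shared budget is used up.
                while (remaining.getAndDecrement() > 0) {
                    long t0 = System.nanoTime();
                    boolean ok;
                    try {
                        ok = request.call();
                    } catch (Exception e) {
                        ok = false;
                    }
                    totalNanos.addAndGet(System.nanoTime() - t0);
                    if (!ok) {
                        errors.incrementAndGet();
                    }
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.HOURS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("batch interrupted", e);
        }
        double elapsedSeconds = (System.nanoTime() - start) / 1e9;

        double meanMillis = totalNanos.get() / 1e6 / totalRequests;
        double perSecond = totalRequests / elapsedSeconds;
        return new Result(totalRequests, errors.get(), meanMillis, perSecond);
    }

    public static void main(String[] args) {
        // Stub request standing in for the real GET; it always succeeds.
        Callable<Boolean> stub = () -> true;
        for (int threads : new int[] {10, 20, 40, 80}) {
            Result r = runBatch(threads, 20_000, stub);
            System.out.printf("%d threads: %.3f ms mean, %.0f req/s, %d errors%n",
                    threads, r.meanResponseMillis, r.requestsPerSecond, r.errors);
        }
    }
}
```

Repeating each batch 10 times and averaging the `Result` values would mirror the rest of the procedure.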

The Setup

Each version of the app was deployed on the same physical server (a Dell something or other running Solaris…) with WebLogic 10.6.3 installed. A single instance of each container was run. Load was generated from a MacBook Pro running JMeter. During the test the server never got above 70% CPU utilization, and the load generator never got above 80% utilization.

The Results

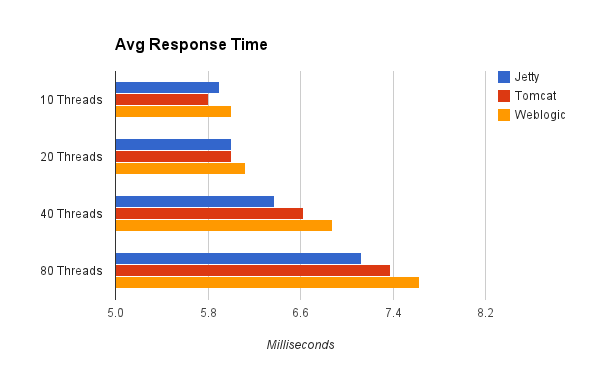

Response Time

The above chart shows raw response time as recorded by JMeter for a GET request to the service (shorter bars indicate better performance). Interestingly, both Jetty and Tomcat outperformed WebLogic across the board. At 10 threads, Tomcat had the fastest response times. At 20 threads, Tomcat and Jetty were even with each other and ahead of WebLogic. At 40 and 80 threads, Jetty was the clear leader, followed by Tomcat and then WebLogic. So, it seems there’s something to the argument that a stripped-down, lightweight servlet container should be able to outperform a bloated, full-featured commercial server like WebLogic.

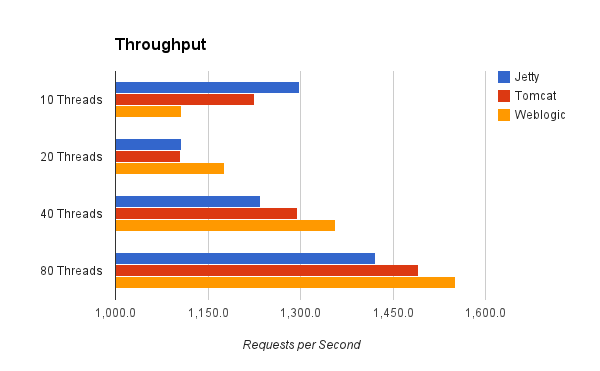

Throughput

It seems logical that a super lightweight server like Jetty should be faster than WebLogic in terms of raw speed, but what about throughput? Here the numbers tell a slightly different story.

The chart above shows average throughput in requests per second (longer bars are better). At lower levels of concurrency, both Tomcat and Jetty were able to churn through more requests per second than WebLogic, with Jetty showing a clear lead at 10 threads. However, at both 40 and 80 threads, WebLogic gained an edge, followed by Tomcat and then Jetty. This suggests that WebLogic’s thread management may be optimized for throughput at the expense of raw speed, a logical design choice for an enterprise product like WebLogic.
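
If you want to sanity-check throughput numbers like these against response times, Little’s Law gives a handy rule of thumb for a closed-loop test with no wait time: throughput is roughly the number of threads divided by the mean response time. The sketch below just does that arithmetic. The class name `ClosedLoopEstimate` and the numbers in `main` are hypothetical and are not taken from my results.

```java
// Hypothetical helper for a back-of-the-envelope check.
class ClosedLoopEstimate {

    /**
     * Little's Law for a closed loop with zero think time:
     * requests per second = threads / mean response time in seconds.
     */
    static double expectedThroughput(int threads, double meanResponseMillis) {
        return threads * 1000.0 / meanResponseMillis;
    }

    public static void main(String[] args) {
        // Illustrative values only: 40 threads at a 20 ms mean response time.
        System.out.println(expectedThroughput(40, 20.0)); // prints 2000.0
    }
}
```

When measured throughput and this estimate disagree, the gap usually comes from time spent outside the sampled request, such as ramp-up or work on the load generator itself, so small differences between servers should be read with that in mind.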

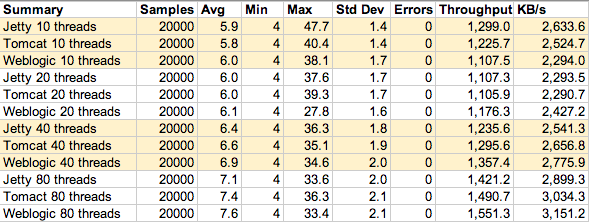

The Data

The table above shows a summary of the raw data across all of the test runs. It’s worth noting that of the hundreds of thousands of highly concurrent requests run during the course of this test, not a single one resulted in an error. This was by no means a long-duration torture test, but it does show that there were no obvious differences in reliability between the containerless options tested and WebLogic.

Conclusion

In my opinion, the arguments for going containerless speak for themselves. What could be simpler from an operations standpoint than dropping a jar file on a bare OS and running it, with no server installation or configuration to bother with? The purpose of this test was to see whether you would be sacrificing something significant in moving to the containerless model from a more traditional enterprise deployment model. The answer is a resounding no. In raw speed tests, the containerless model outperformed WebLogic across the board. In throughput tests, WebLogic claimed an edge at higher levels of concurrency. However, keep in mind that the margins involved in declaring victory for any server over the others were tiny in all cases. Realistically, you would probably never notice a difference in performance between the deployment scenarios tested. And that was the point. I, like many people steeped in “enterprise” culture, held the illogical belief that WebLogic, a big commercial product supported by practically unlimited resources, just had to perform significantly better (think 2x) than a simple embedded servlet container. Luckily, that just isn’t the case.
